Implementing an enterprise service bus (ESB) is not an easy task, even for integrators who have deployed dozens of similar solutions. You need to determine which architecture suits a particular project, find an effective way to transfer data, and decide how best to implement services within the bus.

In 2022, KT.team learned the hard way that if you don't follow the process and ask yourself and the customer the right questions at the analysis stage, even straightforward integrations can be done incorrectly. We quickly launched the first version of a startup's service bus and then redesigned it twice. Here is why this happened, what we learned, and how to avoid repeating our mistakes.

A little bit of context

Last year, KT.team implemented a service bus for ATIMO, a startup that built an app for automatically issuing waybills to drivers.

ATIMO works with taxi companies and fleets that do not want to employ mechanics and doctors. Such companies use automated inspection points to check the technical condition of cars and the health of drivers. At these points, a technician and a doctor conduct examinations and upload the results to the ATIMO system for processing. The taxi fleet sends information about drivers and cars to ATIMO and receives a ready-made waybill in the app in response.

At the time, only two taxi companies used the system, each connected to it directly, but plans to scale made ATIMO rethink the architecture of its IT solution.

At this stage, ATIMO contacted KT.team. The startup needed a service bus: software that flexibly integrates different systems. The bus was supposed to exchange data between the taxi fleets, the technical and medical examination points, and the ATIMO databases without overloading the server.

The first mistake: building a bus architecture similar to direct integration

What we did

We built the bus architecture much like a direct connection: a single service requested data from each database in turn every 20 minutes.

This architecture had a big advantage: it needed about eight times less memory. With a direct connection, each request “ate” up to 4 GB of memory; in ESB 1.0, one request took about 500 MB.

What's the mistake

At the start of the implementation, only two taxi fleets had to be connected to the bus, so KT.team chose a fairly simple approach. ESB 1.0 ran for six months, during which new taxi companies began connecting to the ATIMO system. When their number reached 10, it became clear that the bus was not fault-tolerant at all.

The problem stemmed from the flawed initial bus architecture: the more databases were connected to it, the higher the risk of failure. The databases were polled sequentially, and if one of them did not respond or dropped the connection, the bus stopped working. So if connecting to the very first taxi fleet failed, all the other taxi companies were left without waybills too.
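The failure mode of sequential polling can be sketched in a few lines of Python. This is an illustration, not the actual ESB 1.0 code: `fetch` is a hypothetical function that returns rows from one fleet database or raises an exception, and `fake_fetch` simulates a first fleet that drops the connection.

```python
from typing import Callable, Dict, List

# Hypothetical fetch function: takes a fleet name, returns rows or raises.
Fetch = Callable[[str], List[dict]]


def poll_sequentially(fleets: List[str], fetch: Fetch) -> Dict[str, List[dict]]:
    """ESB 1.0-style cycle: one service walks through every fleet in turn.

    There is no per-fleet error handling, so an exception from any fleet
    aborts the whole cycle and every later fleet is skipped.
    """
    results: Dict[str, List[dict]] = {}
    for fleet in fleets:
        results[fleet] = fetch(fleet)  # a failure here propagates and ends the loop
    return results


def fake_fetch(fleet: str) -> List[dict]:
    # Simulated source: the first fleet drops the connection.
    if fleet == "fleet-1":
        raise ConnectionError(f"{fleet}: connection reset")
    return [{"fleet": fleet, "driver_id": 1}]


if __name__ == "__main__":
    try:
        poll_sequentially(["fleet-1", "fleet-2", "fleet-3"], fake_fetch)
    except ConnectionError as exc:
        # fleet-2 and fleet-3 were never polled in this cycle
        print("cycle aborted:", exc)
```

Because the loop has a single point of control, one unreachable database is enough to leave every fleet after it without fresh data.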

In addition, ESB 1.0 used a message broker to transfer information. The bus passed data from the taxi fleet to the ATIMO system and back as is, without any processing. The exchange was not always correct, and parts of messages could be lost.

How we should have done it

Using a separate service for each connection makes the architecture less tightly coupled: a failure in one database does not affect data exchange with the others. Such an architecture is more resilient.
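Here is a minimal sketch of per-connection isolation, assuming each "service" can be approximated by an independent worker in a thread pool (in production these would more likely be separate processes or deployments). `poll_fleet` and `poll_independently` are hypothetical names; the point is that each fleet's failure is caught and reported inside its own worker.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Union

Fetch = Callable[[str], List[dict]]
Outcome = Union[List[dict], Exception]


def poll_fleet(fleet: str, fetch: Fetch) -> Outcome:
    """One 'service': polls a single fleet and contains its own failures."""
    try:
        return fetch(fleet)
    except Exception as exc:  # the failure stays inside this fleet's worker
        return exc


def poll_independently(fleets: List[str], fetch: Fetch) -> Dict[str, Outcome]:
    # Each fleet gets its own worker, so a failing fleet does not prevent
    # the others from being polled and stored.
    with ThreadPoolExecutor(max_workers=max(1, len(fleets))) as pool:
        futures = {fleet: pool.submit(poll_fleet, fleet, fetch) for fleet in fleets}
        return {fleet: fut.result() for fleet, fut in futures.items()}
```

With the same failing first fleet as in the sequential case, the result maps `fleet-1` to a `ConnectionError` while the other fleets still return their rows.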

To make data transfer more stable, it is better to use internal storage, that is, the bus's own databases. The bus receives data from the taxi fleet databases and ATIMO and stores it locally. This makes transmission faster and more stable.
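A minimal sketch of that idea, assuming SQLite as the bus's internal database and a hypothetical `drivers` table keyed by fleet and driver: data pulled from a source is written into the bus's own table, and consumers read from that table instead of querying the taxi fleet database, so a slow or unavailable source never sits on the read path.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical row shape: (fleet, driver_id, car_plate).
Row = Tuple[str, int, str]


class BusStore:
    """Sketch of the bus's own storage; an in-memory SQLite DB stands in for it."""

    def __init__(self) -> None:
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE drivers ("
            " fleet TEXT, driver_id INTEGER, car_plate TEXT,"
            " PRIMARY KEY (fleet, driver_id))"
        )

    def save(self, rows: List[Row]) -> None:
        """Write data pulled from a source system into the bus's own table."""
        with self.conn:  # commit on success, roll back on error
            self.conn.executemany(
                "INSERT OR REPLACE INTO drivers VALUES (?, ?, ?)", rows
            )

    def read(self, fleet: str) -> List[Row]:
        """Consumers query the bus; the source database is not touched here."""
        return self.conn.execute(
            "SELECT fleet, driver_id, car_plate FROM drivers"
            " WHERE fleet = ? ORDER BY driver_id",
            (fleet,),
        ).fetchall()
```

`INSERT OR REPLACE` keeps one row per driver, so repeated pulls update records instead of duplicating them.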

The second mistake: fighting the symptoms rather than solving the main problem

What we did

For ESB 2.0, KT.team planned to use several data collection services instead of one, and two internal databases instead of a message broker.

In the end, we implemented an interim solution: we kept one service for all taxi companies but completely changed the data exchange logic. The message broker was replaced by two databases: one for information about cars and drivers, the other for ready-made waybills. The bus then served data from these databases to the ATIMO system or the taxi fleet on request.
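The ESB 2.0 data flow can be pictured as two stores, each with one writer and one reader. This is a sketch under assumptions: Python dicts stand in for the two databases, and the class and method names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Esb2Stores:
    """Sketch of ESB 2.0's two internal stores (dicts stand in for databases).

    - drivers_cars: data pulled from taxi fleets, read by ATIMO on request
    - waybills: ready-made waybills from ATIMO, read by the fleets on request
    """
    drivers_cars: Dict[str, List[dict]] = field(default_factory=lambda: defaultdict(list))
    waybills: Dict[str, List[dict]] = field(default_factory=lambda: defaultdict(list))

    def put_fleet_data(self, fleet: str, records: List[dict]) -> None:
        # Taxi fleet -> bus: replace the fleet's latest driver/car data
        self.drivers_cars[fleet] = list(records)

    def get_fleet_data(self, fleet: str) -> List[dict]:
        # ATIMO reads driver/car data from the bus on request
        return list(self.drivers_cars.get(fleet, []))

    def put_waybill(self, fleet: str, waybill: dict) -> None:
        # ATIMO -> bus: store a ready-made waybill for the fleet
        self.waybills[fleet].append(waybill)

    def get_waybills(self, fleet: str) -> List[dict]:
        # The taxi fleet reads its waybills from the bus on request
        return list(self.waybills.get(fleet, []))
```

Neither side talks to the other directly; each only reads from or writes to the bus, which is what removed the lost-message problem of the broker setup.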

This decision was made for two reasons. First, the planned version of the bus would have required more resources, because all taxi fleets would have been processed simultaneously rather than one by one. Second, the customer's priority was to ship changes quickly, and a complete architecture redesign would have taken time.

In addition, ATIMO asked us to poll the taxi companies more often, uploading new data every minute. The project team met this requirement too: technically, the bus can poll even more frequently.

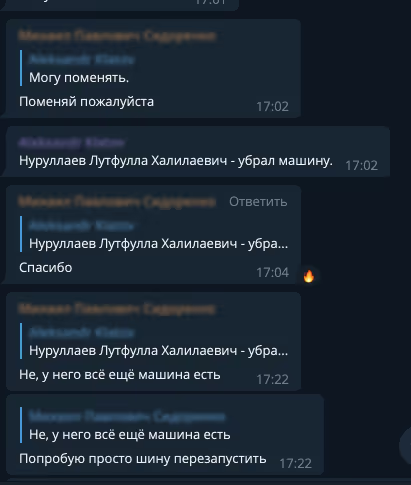

However, the taxi fleet databases could no longer keep up with this rate. For one particularly slow database, we had to artificially limit the number of requests: it treated the once-a-minute connections as a DDoS attack and blocked the bus.
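One way to limit requests for a single slow source is a per-database minimum interval between polls. This is a hypothetical sketch, not the mechanism KT.team used: `default_interval`, `overrides`, and the 300-second value for the slow database are illustrative, and the clock is injectable so the logic can be checked without waiting.

```python
import time
from typing import Callable, Dict, Optional


class PerSourceThrottle:
    """Enforces a minimum interval between polls, configurable per database."""

    def __init__(self, default_interval: float,
                 overrides: Optional[Dict[str, float]] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.default_interval = default_interval
        self.overrides = overrides or {}   # per-source intervals, in seconds
        self.clock = clock
        self.last_poll: Dict[str, float] = {}

    def interval_for(self, source: str) -> float:
        return self.overrides.get(source, self.default_interval)

    def should_poll(self, source: str) -> bool:
        """Return True (and record the poll) if enough time has passed."""
        now = self.clock()
        last = self.last_poll.get(source)
        if last is not None and now - last < self.interval_for(source):
            return False  # too soon: skip this tick for this source
        self.last_poll[source] = now
        return True
```

With `PerSourceThrottle(60.0, {"slow-db": 300.0})`, every database is polled once a minute except `slow-db`, which is polled at most once every five minutes.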

The update was rolled out, and ATIMO immediately noticed an improvement. The bus worked smoothly, and there were practically no support requests. The solution turned out to be economical in memory consumption and quite stable at the same time.

What's the mistake

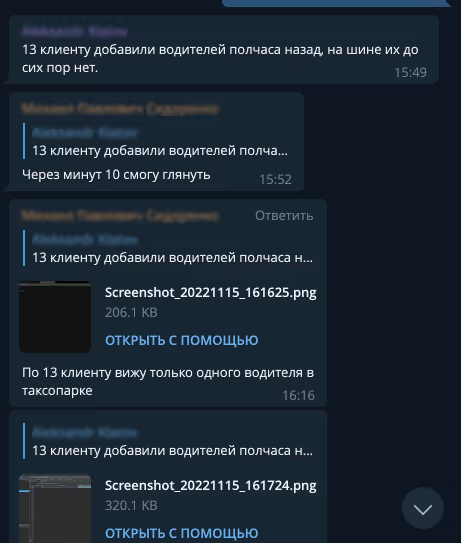

At first glance, everything was fine: ESB 2.0 worked stably and new taxi companies were successfully connected to it. About a month later, there were already 15.

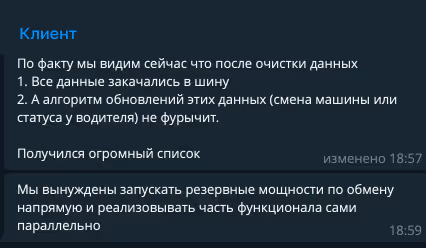

The problem was that the ESB architecture was still flawed: one service polled all 15 databases sequentially. Meanwhile, the ATIMO system kept growing, and it was clear that sooner or later the bus would start failing again.
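A rough way to see why sequential polling does not scale: when one service polls N databases in turn, a full cycle lasts as long as all the individual polls combined. With a one-minute interval and 15 databases, each poll must finish in 4 seconds on average, and a single database that hangs until a timeout can push the whole cycle past the interval. The helper names and the timings in the usage note are hypothetical.

```python
from typing import Sequence


def sequential_cycle_seconds(poll_times: Sequence[float]) -> float:
    """Cycle length when one service polls databases one after another."""
    return sum(poll_times)


def max_avg_poll_time(num_sources: int, interval_seconds: float) -> float:
    """Average per-database time budget that still fits one cycle in the interval."""
    return interval_seconds / num_sources
```

For example, `max_avg_poll_time(15, 60.0)` leaves 4 seconds per database, while 14 polls of 2 seconds plus one 45-second timeout give a 73-second cycle, which overruns the minute. Every new fleet shrinks the budget further.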

The mistake in this version was that the project team focused on saving server resources and reducing development time. The key architectural problem of ESB 1.0 carried over into ESB 2.0, so the bus had to be reworked again.

How we should have done it

For an ESB to be truly fault-tolerant, it needs the right architecture. The best approach in this situation is to agree on realistic development deadlines with the customer. A full redesign takes time, but it results in a scalable and reliable data bus.

KT.team already knew what needed to change, so instead of waiting for a request from ATIMO or an ESB failure, the team redesigned the bus on its own initiative.

Final ESB: reliable, stable and fast

What we did

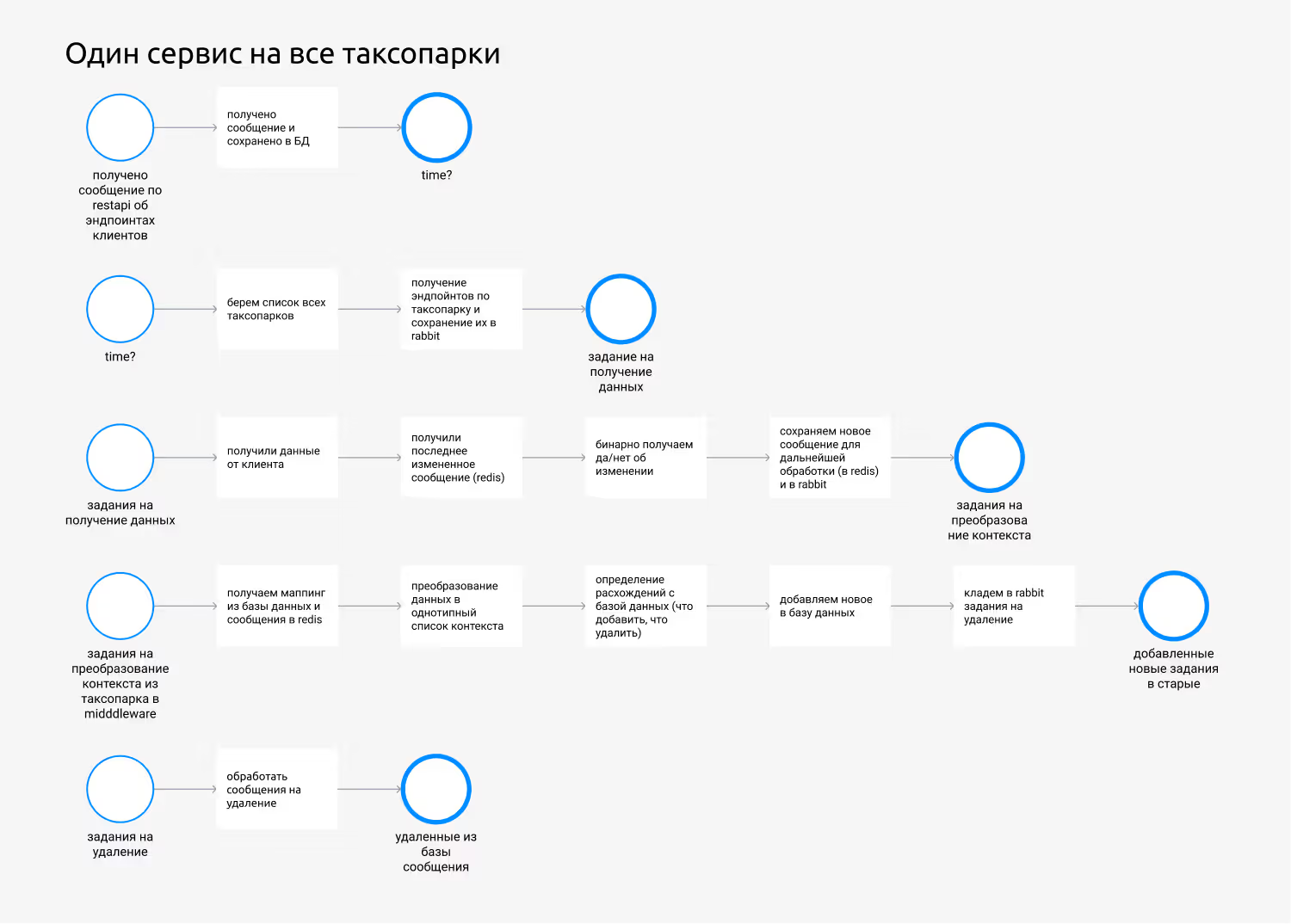

In ESB 3.0, we implemented the architecture that had been set aside in the previous version, with a separate service for each database. All services work the same way:

- connect to the taxi fleet database;

- download data from it;

- convert it to the format used by the ATIMO system (mapping rules have been set up for this);

- write new data to the repository;

- delete irrelevant data.
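The steps of a per-fleet service can be sketched as a single function. This is an illustration under assumptions: mapping rules are modeled as simple field renames, the bus storage is a dict keyed by driver ID, and all names (`map_record`, `run_fleet_service`, the field names in the usage note) are hypothetical rather than taken from the real ESB 3.0.

```python
from typing import Callable, Dict, List

# Hypothetical mapping rules: fleet-specific column name -> ATIMO field name.
MappingRules = Dict[str, str]


def map_record(raw: dict, rules: MappingRules) -> dict:
    """Convert one fleet record to the (assumed) ATIMO format by renaming fields."""
    return {atimo_field: raw[src] for src, atimo_field in rules.items() if src in raw}


def run_fleet_service(fetch: Callable[[], List[dict]],
                      rules: MappingRules,
                      store: Dict[str, dict],
                      key_field: str = "driver_id") -> None:
    """One pass of a per-fleet service: fetch, map, write, then prune stale data."""
    raw_rows = fetch()                                  # connect and download
    mapped = [map_record(r, rules) for r in raw_rows]   # convert to ATIMO format
    fresh = {str(m[key_field]): m for m in mapped}
    store.update(fresh)                                 # write new data
    for stale_key in set(store) - set(fresh):           # delete irrelevant data
        del store[stale_key]
```

For a fleet whose database uses columns `DriverNo`, `Name`, and `Plate`, the rules might be `{"DriverNo": "driver_id", "Name": "full_name", "Plate": "car_plate"}`; only this dictionary differs between fleets, while the function stays the same.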

To keep the services from consuming a lot of server resources, they were made as simple as possible: all unnecessary and resource-intensive steps, such as exception logging, were removed. Instead, the data in the storage is overwritten every time a service successfully connects to the taxi fleet database. Polling one taxi company now takes about 300 MB of memory (compared with 4 GB at the start of the project).
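The "overwrite on success, skip on failure" approach can be sketched with SQLite, assuming a hypothetical `drivers` table in the bus storage. The delete and insert run in one transaction, so readers see either the old snapshot or the new one; if the fleet database is unreachable, nothing is logged and the previous snapshot stays in place until the next run.

```python
import sqlite3
from typing import Callable, List, Tuple

Row = Tuple[str, int, str]  # hypothetical shape: (fleet, driver_id, car_plate)


def refresh_snapshot(conn: sqlite3.Connection, fleet: str,
                     fetch: Callable[[str], List[Row]]) -> bool:
    """Overwrite one fleet's data in the bus storage after a successful fetch.

    No exception logging: if the fleet database is unreachable, the previous
    snapshot is kept and the next scheduled run simply tries again.
    """
    try:
        rows = fetch(fleet)
    except (ConnectionError, TimeoutError):
        return False
    with conn:  # DELETE and INSERT commit together, or roll back together
        conn.execute("DELETE FROM drivers WHERE fleet = ?", (fleet,))
        conn.executemany("INSERT INTO drivers VALUES (?, ?, ?)", rows)
    return True
```

Replacing the whole snapshot also covers the "delete irrelevant data" step: drivers that disappeared from the fleet database disappear from the bus on the next successful run.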

The final version of the service bus was rolled out shortly before 2023. After that, KT.team did not receive a single support request until the end of January, and the first question from ATIMO had nothing to do with the reliability of the bus.

The reason is that ESB 3.0 has a useful feature: you can create a new connection point for a taxi fleet simply by cloning any existing one. Then you adjust a few settings, and the ESB can fetch the data it needs. This made the bus scalable: ATIMO does not need to contact technical support to add a taxi fleet.
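Cloning a connection point can be modeled as copying a settings object and overriding a few fields. This is a hypothetical sketch: the `FleetConnection` fields (`dsn`, `poll_interval_s`, `mapping`) are assumptions for illustration, not ESB 3.0's actual settings.

```python
from dataclasses import dataclass, field, replace
from typing import Dict


@dataclass(frozen=True)
class FleetConnection:
    """Hypothetical settings for one taxi fleet connection point."""
    name: str
    dsn: str                       # connection string to the fleet database
    poll_interval_s: int = 60
    mapping: Dict[str, str] = field(default_factory=dict)  # fleet column -> ATIMO field


def clone_connection(template: FleetConnection, **settings) -> FleetConnection:
    """Create a new connection point from an existing one, overriding a few settings.

    The mapping dict is copied so that editing the clone's rules later
    does not change the template's rules.
    """
    return replace(template, **{"mapping": dict(template.mapping), **settings})
```

Adding a fleet then comes down to something like `clone_connection(existing, name="fleet-b", dsn="...")`, plus adjusting the mapping if the new database uses different column names.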

KT.team wrote step-by-step instructions to make the process clearer. They were the subject of ATIMO's first question: the description of one of the steps was not entirely clear. The team answered the question and corrected the text, and now adding a new taxi fleet takes only a couple of hours instead of the several days it took before.

What happened in the end

ATIMO is successfully integrating new taxi companies with ESB 3.0 without KT.team's help. In the first 3.5 months of 2023, the startup's team added 10 new integrations on its own using the documentation. The system now has 20 taxi fleets, and the bus collects driver and car data from twice as many databases.

The limited resources of the ATIMO server are used sparingly: one request consumes only 300 MB of memory instead of 4 GB with a direct connection. This reduction was possible thanks to the very simple design of the service that polls each taxi fleet database.

The support chat is quiet: the bus does its job, and the rare questions concern minor local failures on the side of individual databases. Most often the issue resolves itself: the taxi company fixes things on its own side, and the ATIMO system receives updated data.

Through this project, KT.team gained valuable experience and refined its ESB implementation process. To implement a bus correctly, you have to account for both future growth and possible points of failure from the very start. That is why it is best to build such a bus with a separate service for each connection, even if there are only two connections so far.