Most IT products for our customers start with modular development. Some of them will eventually evolve into microservices. The other part doesn't need microservices. In this article, we'll look at why this is so and what criteria help determine whether to implement microservices or should we stick to working with modules?

Microservice and modular systems are types of architecture

IT solutions.

- When working with modules, the boxed version of an existing IT product is being finalized. The boxed version is a monolith, a ready-made system with a core that is delivered to all customers in the same way, “as it is”. The refinement consists in creating modules with missing functionality. New modules are obtained by reusing parts of the monolith (core or other modules). Business logic is written within a monolith: a program (application, website, portal) has one entry point and one exit point.

- When working with microservices, an IT product is created from scratch; it is made up of “bricks” — atomic microservices that are responsible for a separate small process (sending an email, receiving order data, changing order status, creating a customer, etc.).

A set of such blocks is combined by business logic into a common system (for example, using BPMS). Despite the existence of connections, each block is autonomous and has its own entry and exit points.

The advantages of a modular architecture

All CMS (Bitrix, Magento, Drupal, Hybris, etc.), CRM, ERP, WMS and many other systems have boxed versions. They sell well and are in high demand.

Let's consider the reasons why customers most often choose to work with modular architecture and are willing to buy boxed solutions.

1. High speed of implementation

Installing, configuring and filling out manuals for such software takes a little time.

It is realistic for a medium-sized business to get started with the box three to four months after launch.

For small businesses, it might only be a few days.

2. Affordable price

Some products may even be free. Of course, this does not apply to high-load projects and enterprise solutions with complex logic.

3. Ready-made functionality is sufficient for many tasks

The boxed versions contain a lot of ready-made functionality. For non-key business tasks, software out of the box is quite suitable. For example, if you need to install an inventory program, but inventory is not a priority for your business, and you do not plan to make a profit from this action, a boxed solution will be enough for you.

4. Although small, it is variable

Usually, the product out of the box is presented in two or more versions with different functionality. Different modifications are selected for different types of businesses, company sizes and other conditions.

Problems of modular systems

The main problem is that all modular systems are not designed to seriously redefine functionality. They have a box, ready-made modules — that's better to use them.

The closer the project size and the complexity of its customization are to the enterprise level, the more problems there will be with finalizing the modules. Let's talk about the main ones.

Problem number 1. The core of the system becomes a slowdown point, and modularity becomes an unnecessary complication

Let's say your project involves complex warehouse logic. If you've chosen a modular architecture, developers don't just need to create functionality to manage these warehouses — they need to redefine or expand the multiwarehouse module, which, in turn, uses core methods.

At the same time, it is necessary to take into account the complex logic of returns to warehouses: dependence on events from the CRM system, the movement of goods between catalogs, etc. As well as the hidden logic that is associated with the return of funds, bonus points, etc.

When so many redefinitions occur, the monolith changes significantly. It is important to remember that the relationship between the amount of new functionality and the number of modules is non-linear: to add one function, you need to make changes to several modules, each of which changes the way the other works. Or redefine a large number of methods from other modules in the new module, which doesn't change the essence.

After all the changes, the system becomes so complicated that it will take an indecently long number of hours to add further customizations.

Problem #2. The principle of self-documentation is not supported in modular systems

Documentation for modular systems is difficult to keep up to date. There is a lot of it, and it gets out of date with every change. Refinement of one module entails changes in several other documents (user and technical documentation), and all of them need to be rewritten.

As a rule, there is no one to do such work: wasting the time of valuable IT specialists on this means simply draining the budget. Even using documentation storage in code (PHPDoc) does not guarantee its accuracy. After all, if the documentation might differ from the implementation, it's bound to be different.

Problem number 3. Greater code coherence is the way to regression: “change here, fall off there”

The classic problems of modular systems are related to the fight against regression.

TDD development is difficult to use for monoliths due to the high connectivity of different methods (you can easily spend 30 lines of tests, plus fixtures, on five lines of code). Therefore, when fighting regression, it is necessary to cover the functionality with integration tests.

But due to the already slow development (after all, you need to develop carefully to allow for many redefinitions), customers don't want to pay for complex integration tests.

Functional tests are getting as big as they are pointless. They run for hours, even in parallel mode.

Yes, a modern front like PWA can be tested API-functionally. But tests often depend on getting data from an external circuit — and that's why they start to fail if, for example, SAP test is N months behind the product one, and the test 1C sends incorrect data.

When they have to upload a minor edit to a module, developers have to choose between two evils: run a full CI run and spend a lot of time deploying it, or hotfix it without running the tests, at the risk of breaking something. It's quite dramatic when such edits arrive from the marketing department on Black Friday. Of course, regression and human error will happen sooner or later. Is it familiar?

Ultimately, to achieve business goals, the team goes into emergency mode of work, skillfully juggles tests and closely looks at the dashboards from the logs — Kibana, Grafana, Zabbix... And what do we get at the end? Burnout.

You must admit that this regression situation is not like a “stable enterprise”, as it should be in the customer's dreams and dreams.

Problem number 4. Code coherence and platform updates

Another problem with code coherence is the difficulty in updating the platform.

For example, Magento contains two million lines of code. Everywhere we look, there's a lot of code (Akeneo, Pimcore, Bitrix). When adding functionality to the core, it's best to take into account changes in your custom modules.

Here is an example for Magento (there are similar cases in any modular system on large projects).

At the end of 2018, a new version of the Magento 2.3 platform was released. Multiwarehouses and Elasticsearch have been added to the Open Source Edition. In addition, thousands of bugs have been fixed in the kernel and some features have been added to OMS.

What did e-commerce projects that already have multiwarehouses written in Magento 2.2 face? They had to rewrite a lot of logic in order processing, checkout, and product cards in order to switch to boxed functionality. After all, “that's right” — why duplicate the functionality of the boxed version in modules? It is always useful to reduce the amount of custom code in a large project, as all boxed methods take into account these multi-warehouses; updating the box without such refactoring may be pointless (check security issues for simplicity, especially since they can be rolled in without updating).

Now imagine how long would it take to upgrade like this? How can you test this without integration tests, which are difficult to write?

It is not surprising that many people update the platform either without refactoring, but with an increase in duplication, or (if the team wants to do everything “according to feng shui”) with a “refactoring” and putting things in order for a long time.

Problem number 5. Opacity of business processes

One of the most important problems in project management is that the customer does not see all the logic and business processes of the project. They can only be restored from code or from documentation (which, as we said earlier, is difficult to maintain in modular systems).

Yes, Bitrix has a BPM part, and Pimcore has a workflow visualization. But this attempt to manage modules using business processes always comes into conflict with the presence of a core. In addition, events, complex timers, transactional operations — all this cannot be complete in a modular architecture system. Once again, this applies to medium and large companies. For a small company, the capabilities of modular systems are enough. But if we're talking about the enterprise segment, this solution really lacks a single control center where you can go and see any process, any status, exactly how something happens, what exceptions there are, timers, events and crowns. There is not enough ability to change business processes rather than modules. Project process management is hampered by the speed of change and the coherence of logic.

Problem number 6. The complexity of scaling the system

If you deploy a monolith (for example, on Magento, Bitrix, Akeneo, Pimcore, OpenCart, etc.), it will be deployed in its entirety with all the modules on every app server.

More memory and processors are needed, which greatly increases the cost of the cluster.

How microservices relieve customers from the shortcomings typical of modular development. Orchestration of microservices in Camunda and jBPM

Spoiler: the problems listed in the previous paragraph can be solved using BPMS and orchestration of microservice systems.

BPMS (business process management system) is software for managing business processes in a company. The popular BPMS we also work with are Camunda and JBPM.

Orchestration describes how services should interact with each other using messaging, including business logic and workflow. Using BPMS, we don't just draw abstract diagrams — our business process will be executed according to the drawn ones. What we see in the diagram is guaranteed to correlate with how the process works, what microservices are used, what parameters, and which decision tables are used to select a particular logic.

An example is a common process — sending an order for delivery.

Upon any message or direct call, we start processing the order with the process of choosing a delivery method. The logic of choice has been set.

As a result, processes, services and development:

- become quick to read;

- self-documenting (they work exactly as they are drawn, and there is no desynchronous relationship between documentation and actual code work);

- simply debuggable (it's easy to see how a particular process is going and understand what the mistake is).

Let's get acquainted with the principles by which business process management systems operate.

BPMS Principle No. 1. Development becomes visual and process-based

BPMS allows you to create a business process in which the project team (developer or business user) determines the sequence of launching microservices, as well as the conditions and branches along which it is moving. At the same time, one business process (sequence of actions) can be included in another business process.

All this is clearly presented in BPMS: you can watch these schemes in real time, edit them, and upload them to production. The principle of a self-documented environment is fulfilled here as much as possible — the process works exactly as it is visualized!

All microservices become process cubes that can be added from the toolbox to a business user. The business manages the process, and the developer is responsible for the availability and correct operation of a particular microservice. At the same time, all parties understand the general logic and purpose of the process.

BPMS Principle No. 2. Each service has clear inputs and outputs

The principle sounds very simple, and an inexperienced developer or business user might think that BPMS does not improve the very strategy for writing microservices. Like, even without BPMS, you can write normal microservices.

Yes, it is possible, but it is difficult. When a developer writes a microservice without BPMS, they inevitably want to save on abstractness. Microservices are becoming frankly large, and sometimes they are starting to reuse others. There is a desire to save on the transparency of the transfer of results from one microservice to another.

BPMS encourages writing in a more abstract way. Development is carried out precisely by process, with the definition of input and output.

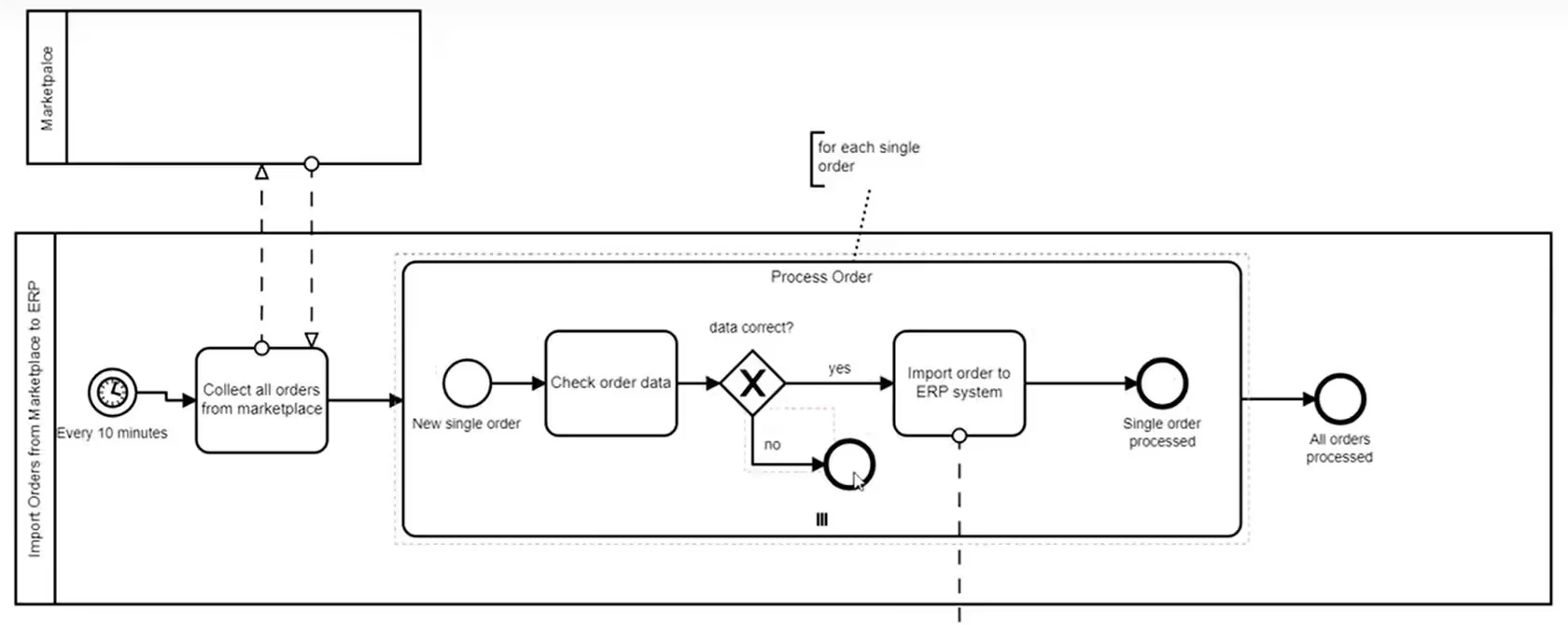

BPMS Principle No. 3. Parallelizing queue processing

Imagine the process of processing orders: we need to go to a marketplace, pick up all the good orders and start processing them.

Look at the diagram (part of the diagram). It states that every 10 minutes we check all marketplace orders, then start processing each order in parallel (as indicated by the vertical “hamburger” in Process Order). If successful, we transfer all data to ERP and complete processing.

If we suddenly need to raise the processing logs for a specific order, in Camunda, JBoss or any other BPMS, we can fully recover all data and see what queue it was in and with what entry/exit parameters.

BPMS Principle No. 4. Mistake and escalation

Imagine that an error occurred during the delivery of the item. For example (by the way, this is a real case), the transport company picked up the order, and the warehouse then burned down. Another true story: New Year's Eve rush, and the goods were first delayed, and then maybe they were lost.

In this case, BPMS uses the mouse to click events, such as notifying the customer if the delivery time has passed.

If we receive an error from the transport company inside, we can start the process on our own branch and interrupt everything: notify us, give a discount on the next order, and return the money.

All such exceptions are not only difficult to program outside of BPMS (for example, a timer in a timer), but also to understand in the context of the entire process.

BPMS Principle No. 5. Selecting actions for one of the events and interprocess options

Imagine the same order in delivery.

In total, we have three scenarios:

- the goods were delivered as expected;

- the goods were not delivered as expected;

- the item was lost.

Right in BPMS, we can determine the procedure for shipping goods to a transport company and wait for one of the following events on request:

- successful delivery (messages from the product delivery process that everything has been delivered);

- or the arrival of a certain time.

If time hasn't passed, you need to launch another service: analyzing this particular order with the operator (you need to assign him a task in the OMS/CRM system to investigate where the order is) and then notify the customer.

But if the order was delivered during the investigation, you must stop the investigation and complete the order processing.

In BPMS, all interrupts and exceptions are on the BPMS side. You don't overload the code with this logic (and the mere presence of such logic in the code would make microservices large and poorly reused in other processes).

BPMS Principle No. 6. In your Camunda you will see all the logs

Using events and the interprocess option, you can:

- you can see the entire sequence of events in one window (it's easy to see what happened to the order, what exception thread it went through, etc.) );

- you can collect all BI analytics based on BPMS logs alone (without the need to overload microservices with logging events).

As a result, you will be able to collect statistics specifically on processing problems, the speed of transitions, and all processes across the company. Logging information becomes unified, and it becomes easy to link a delivery event to an operator's action or an event in any other information system.

Note the difference with the modular system. You can also create universal logs there. But when interacting with other systems, you will need to do something about unifying logging in them as well, and this is not always possible.

Results of comparing microservice and modular architecture

Each type of architecture has its own advantages and disadvantages. There is no universal solution. For small businesses or with very few customizations, a modular approach would be more appropriate. It is important to look for a suitable technical solution rather than promoting a specific platform or approach.

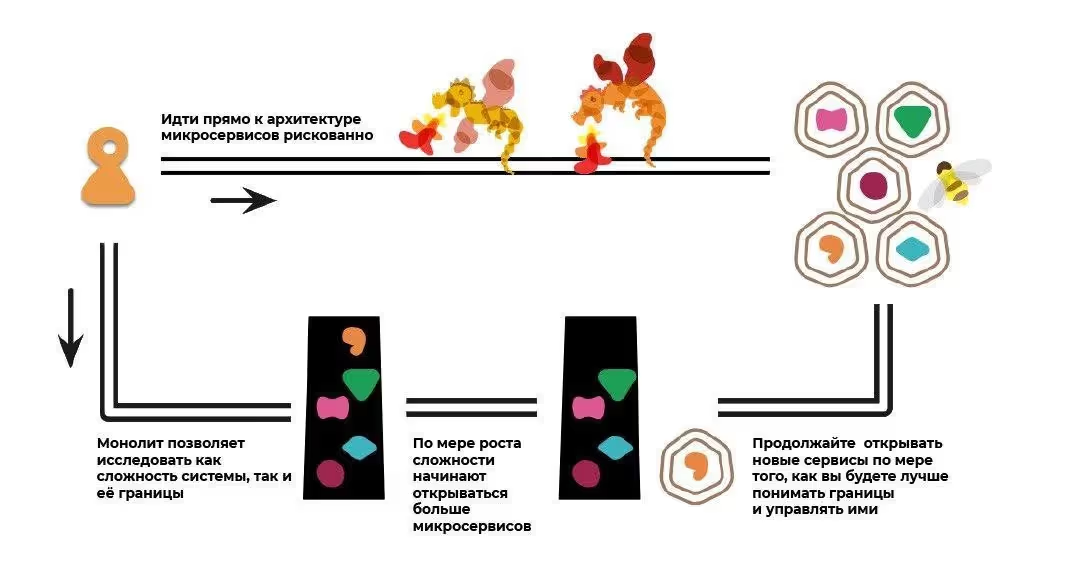

Let's look at how different types of architecture change depending on the life span of the project.

At the start of the project:

- with microservices — you have zero functionality, and you need to write all of it to get started;

- with a modular system — a large amount of functionality is immediately available to you from the boxed version, and you can start using the product soon after purchase.

After the first three to four months of development (this is the average release time for the first MVP) and beyond:

- with microservices — the amount of functionality is gradually equalizing compared to the boxed version. For medium-sized businesses, the microservice architecture will catch up with the modular architecture quite quickly, and for large businesses — instantly. And in the future, the maintenance and development of the modular system per unit of functionality will increase;

- with a modular system — the speed of functional development will be significantly lower than in microservices.

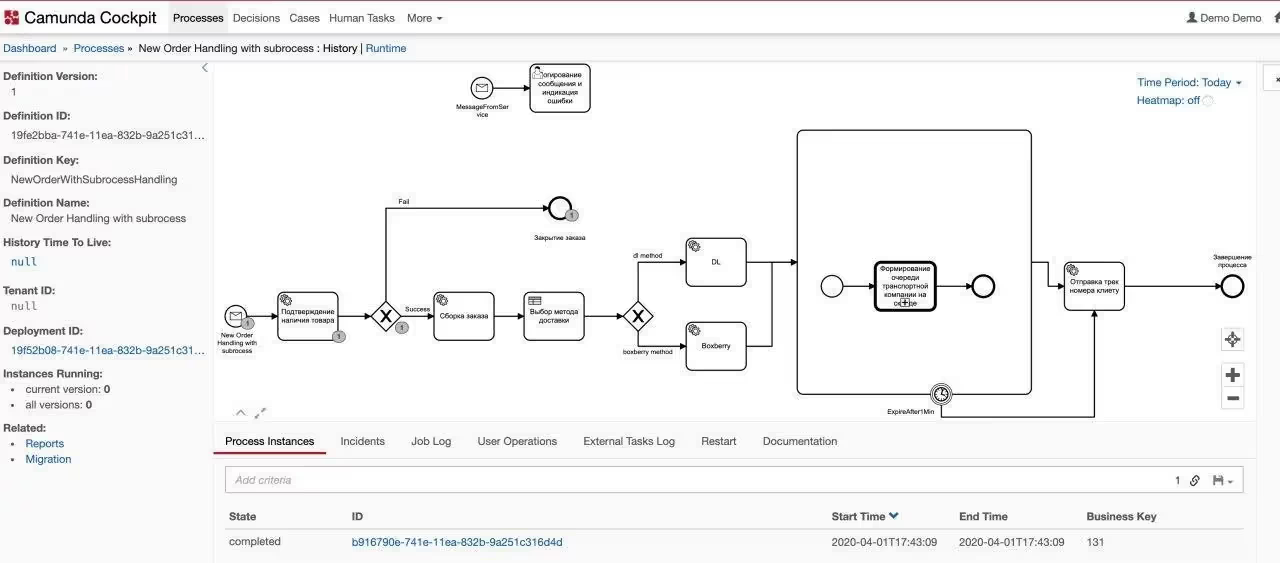

Finally, let's take a look at what microservices orchestration looks like using specific examples.

Service orchestration examples

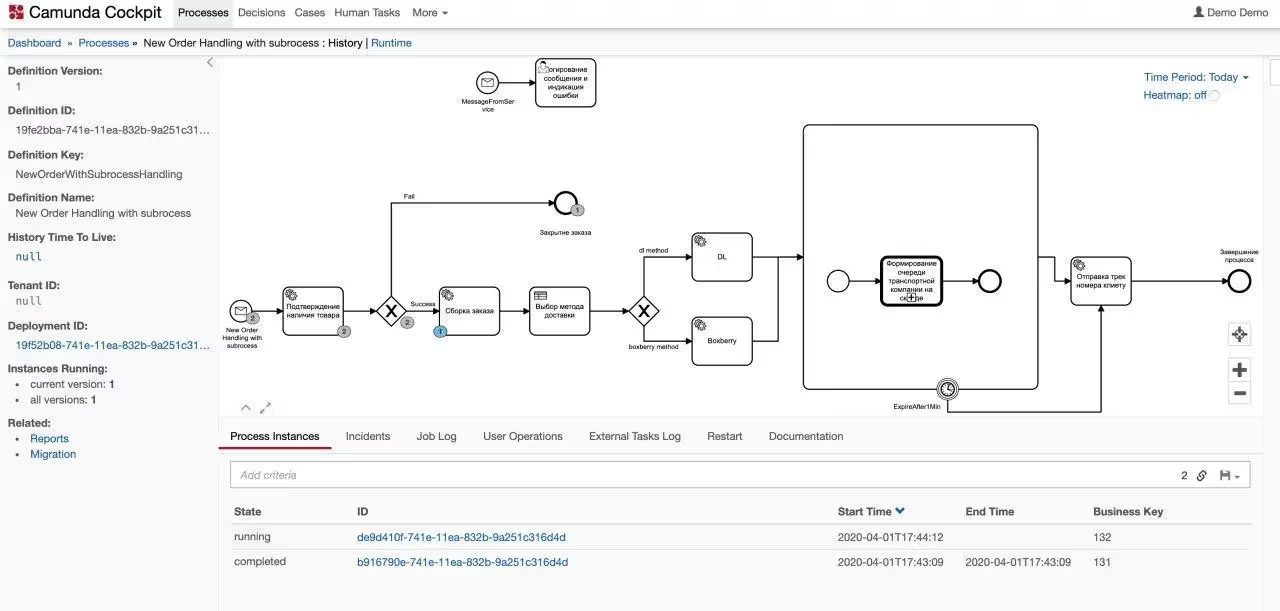

Let's look at orchestrating services using Camunda. Using the following images, you can see how convenient it is to manage microservices using BPMS with an orchestrator. All processes are clear and the logic is obvious.

The business processes look like this:

Example (order, availability service):

It can be seen that this order had a “No product” thread.

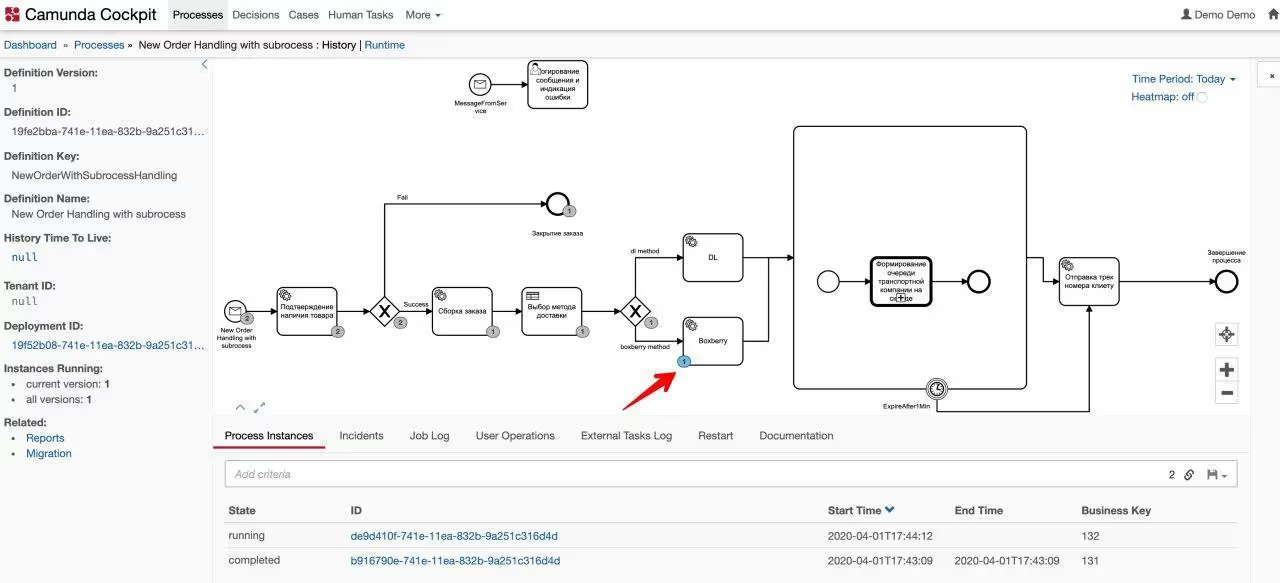

Another copy of the order (assembled):

The order went further and, according to the solution table (DMN), went to the processing branch by a specific delivery service operator (Boxberry):

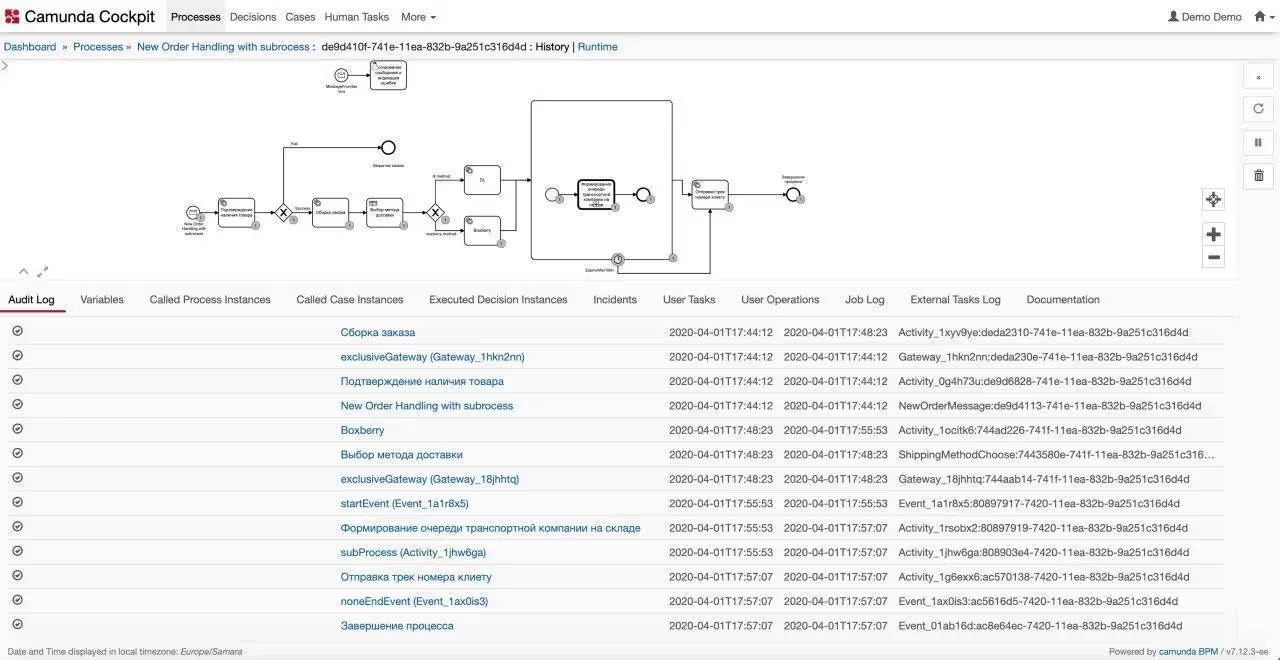

Care for the invested process:

The embedded process worked:

Business process history:

The properties of this visualization are:

- business processes are easy to read even by an untrained user;

- they are executable, that is, they work exactly as they are drawn; there is no out-of-sync between “documentation” and the actual work of the code;

- the processes are transparent: it is easy to see how a particular import, order, processing took place, it is easy to understand where the mistake was made.

See also: